Rank statistics and inference calibration

Inference calibration encompasses a set of techniques for quantifying distribution approximation error in approximate Bayesian inference. For Markov chain Monte Carlo (MCMC), a family of distribution approximation algorithms used extensively by statisticians and probabilistic modelers alike, numerous techniques have been developed for diagnosing problems with inference (including failure of the chain to converge).

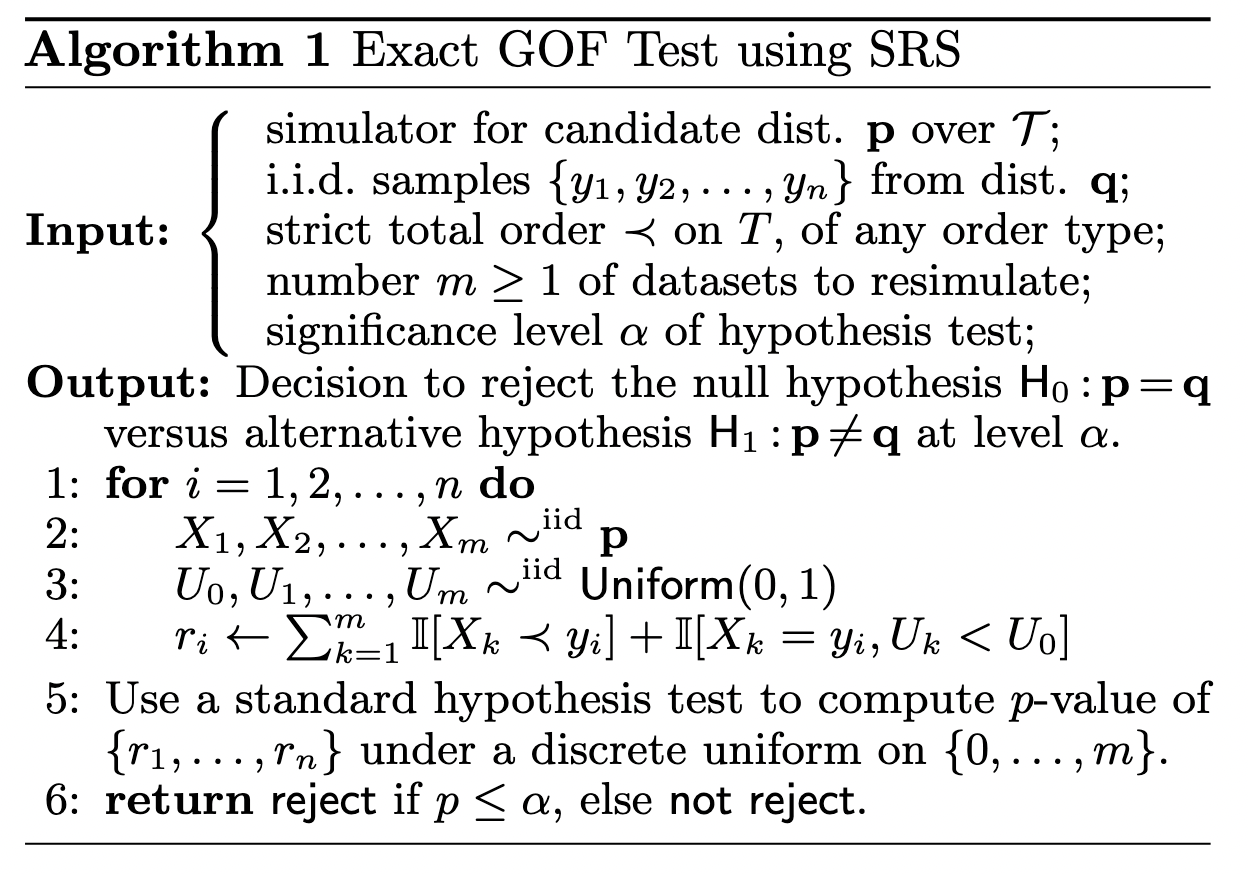

In this note, I’ll be covering Saad et al., 2019, which develops an exact goodness-of-fit test for high-dimensional discrete distributions. Between this paper and generalizations of a technique called simulation-based calibration (SBC), there are several techniques available for detecting distributional mismatch.

Simulation-based calibration

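SBC takes advantage of the fact that samples from the prior, $\tilde{\theta} \sim p(\theta)$, are distributed identically to samples from the data-averaged posterior: if $\tilde{y} \sim p(y \mid \tilde{\theta})$ and $\theta \sim p(\theta \mid \tilde{y})$, then marginally $\theta \sim p(\theta)$.

Given the ability to sample from the joint $p(\theta, y)$, this identity can be checked empirically for any inference algorithm that claims to sample from the posterior $p(\theta \mid y)$.

Provided that this is true, repeatedly computing the rank of the prior draw $\tilde{\theta}$ among $L$ posterior samples $\theta_1, \dots, \theta_L$ yields ranks that are uniformly distributed over $\{0, \dots, L\}$. Systematic departures from uniformity indicate a problem with inference.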

The technique was introduced in Talts et al., 2018.
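To make the rank computation concrete, here is a minimal sketch of SBC on a toy model. Everything in it is an assumption made for illustration and is not taken from Talts et al.: the conjugate model (prior N(0, 1), likelihood N(theta, 1), hence exact posterior N(y/2, 1/2)), the function names `sbc_ranks` and `histogram`, and the `posterior_sd_scale` knob, which deliberately shrinks the posterior spread to mimic an overconfident inference algorithm.

```python
import random


def sbc_ranks(num_trials=2000, num_posterior_draws=9, posterior_sd_scale=1.0, seed=0):
    """Collect SBC ranks for a toy conjugate normal model (illustrative only)."""
    rng = random.Random(seed)
    ranks = []
    for _ in range(num_trials):
        theta_true = rng.gauss(0.0, 1.0)   # theta ~ p(theta)
        y = rng.gauss(theta_true, 1.0)     # y ~ p(y | theta)
        # Exact posterior for this model is N(y / 2, 1 / 2).
        # posterior_sd_scale != 1 simulates a miscalibrated sampler.
        post_mean = y / 2.0
        post_sd = posterior_sd_scale * 0.5 ** 0.5
        draws = [rng.gauss(post_mean, post_sd) for _ in range(num_posterior_draws)]
        # Rank of the prior draw among the posterior draws, in {0, ..., L}.
        ranks.append(sum(d < theta_true for d in draws))
    return ranks


def histogram(ranks, num_bins):
    """Count how often each rank value occurs."""
    counts = [0] * num_bins
    for r in ranks:
        counts[r] += 1
    return counts


good = histogram(sbc_ranks(), 10)                        # exact posterior
bad = histogram(sbc_ranks(posterior_sd_scale=0.5), 10)   # too-narrow posterior
print("calibrated:   ", good)
print("overconfident:", bad)
```

With the exact posterior, the ten rank bins should be roughly equally populated. With the too-narrow posterior, the prior draw tends to fall outside the posterior samples, so the histogram piles up at the extreme ranks.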

Stochastic rank statistic

Simulation-based calibration was designed for inference over a single continuous latent variable, and its generalizations (multiple marginal tests with a multiple-comparison correction such as Bonferroni) essentially follow the same recipe. In Saad et al., 2019, the authors explore goodness-of-fit testing for discrete random variables.

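Structurally, this test is similar to SBC, with the addition of a tie-breaking mechanism for when the order relation produces a tie. Ties matter here because, unlike in the continuous case, two samples from a discrete distribution can be equal with positive probability.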
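The role of tie breaking can be sketched in a few lines. This is an illustrative reading of the idea rather than the algorithm as stated in Saad et al.: the function names `stochastic_rank` and `srs_ranks`, the three-element domain, and the specific weights are all assumptions. The rank counts how many reference samples are strictly smaller than the test sample, then adds a uniformly random offset among the samples that tie with it, so that the rank is uniform over {0, ..., m} when both distributions agree.

```python
import random


def stochastic_rank(y, reference_samples, rng):
    """Rank of y among reference_samples, with uniform random tie breaking."""
    less = sum(x < y for x in reference_samples)
    ties = sum(x == y for x in reference_samples)
    # Place y uniformly at random among the samples equal to it.
    return less + rng.randint(0, ties)


def srs_ranks(sample_p, sample_q, num_trials=3000, m=4, seed=0):
    """Collect ranks of one draw from q among m draws from p."""
    rng = random.Random(seed)
    ranks = []
    for _ in range(num_trials):
        y = sample_q(rng)
        xs = [sample_p(rng) for _ in range(m)]
        ranks.append(stochastic_rank(y, xs, rng))
    return ranks


def categorical(weights):
    """Return a sampler over {0, 1, ..., len(weights) - 1}."""
    support = list(range(len(weights)))
    return lambda rng: rng.choices(support, weights=weights)[0]


p = categorical([0.2, 0.3, 0.5])
q_same = categorical([0.2, 0.3, 0.5])   # matches p
q_diff = categorical([1.0, 0.0, 0.0])   # point mass on the smallest value

match_counts = [srs_ranks(p, q_same).count(r) for r in range(5)]
mismatch_counts = [srs_ranks(p, q_diff).count(r) for r in range(5)]
print("p == q:", match_counts)
print("p != q:", mismatch_counts)
```

When the two samplers agree, the five rank values occur about equally often. When they differ, the counts skew (here toward rank 0), which is the signal a uniformity test on the ranks would pick up.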